Bayesian Optimization

TODO

- Read this animated article.

- Implement Baysian Optimization.

- What are the applications of Baysian Optimization to Reinforcement Learning?

Kinds of Bayesian Optimization

- Using baysian optimization for hyperparameter tuning

- Maximum Likelihood (ML) Estimation

- Maximum a Posteriori (MAP) Estimation

- Bayes Optimal Decision

Assumptions

We assume that the data is i.i.d. and generated ‘by nature’ according to $P(y \vert x) = f(x) + \epsilon $ with $ \epsilon \sim \mathcal{N(0, \sigma^2)}$ being random noise.

Basics

First of all, how do you calculate the likelihood of a parameterized distribution $\mathcal{N(x \vert \mu, \sigma^2)}$ with parameters $\theta = (\mu, \sigma^2)$?

Fig. 1 Likelihood

Fig. 1 Likelihood

Note: $\propto$ means ‘proportional to’. When the likelihood is scaled by a factor, we can ignore it because our argmin yields the same optimal theta.

Using baysian optimization for hyperparameter tuning

If we want to tune the hyperparameters $\lambda$ (number of layers, neurons per layer, conv parameters, $\dots$) of e.g. a neural network, the optimization criterion $f(\lambda)$ is

- expensive to evaluate.

- not differentiable (-> no gradient available).

For these kind of problems, baysian optimization is a good optimization tool.

ML Estimation

To calculate the best parameters, we can maximize the likelihood of theta (also called ML for Maximum Likelihood). This is the same as maximizing the probability of the dependend variable $Y$ given the independend variable $X$ and the parameters $\theta$.

\[\begin{equation} \theta^{*} = arg\,max_{\theta} \, L(\theta) = arg\,max_{\theta} \, \prod_{i=1}^{n} P(y_i \vert x_i, \theta) = arg\,max_{\theta} \, \log \sum_{i=1}^{n} P(y_i \vert x_i, \theta) = arg\,min_{\theta} \, \sum_{i=1}^{n} - \log P(y_i \vert x_i, \theta) \end{equation}\]Because the product gets really small, it’s much easier to compute using a sum (Otherwise it will approach 0 relatively quickly). We can transform the product to a sum using logarithms, because they are strictly monotonic and thus don’t change the minimum that we get by solving the argmin. To summarize, our optimization criterion is \(\theta^{*} = arg\,min_{\theta} \, \sum_{i=1}^{n} - \log P(y_i \vert x_i, \theta)\) and can be estimated through the equation:

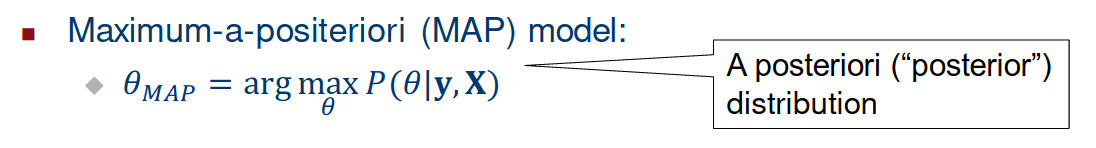

MAP Estimation

The criterion becomes:

Fig. 2 MAP optimization criterion

Fig. 2 MAP optimization criterion

which is equal to the ML model multiplied by $P(\theta)$ (taking a prior distribution of $\theta$, most of the times sampled from a multivariate Normal $\mathcal{N(\theta \vert 0, \sigma^2)}$, into account).

\[\theta_{\text{MAP}} = arg\,max_{\theta} \, P(y \vert X, \theta) P(\theta)\]The MAP estimate can be calculated very similar to the analytic solution of minimizing the regularized quadratic $L_2$ loss.

\[\theta_{\text{MAP}} = \left(X^T X + \frac{\sigma^2}{\sigma_p^2} I\right)^{-1} X^T y.\]Bayes Optimal Decision

In the bayes optimal decision we want to find the most likely $y’$ (Y is the dependend variable) for a given new datapoint $x’$. We want to optimize the optimization criterion

\[y'* = arg\,max_{y} \, P(y' \vert x', y, X)\]Apparently this is best done without having a model (although we sacrifice some time in inference). Instead of using a fixed $\theta$, we integrate over all possible thetas:

Fig. 3 Bayes Optimal Decision integral

Fig. 3 Bayes Optimal Decision integral

References

- MIT - L20.10 Maximum Likelihood Estimation Examples.

- Baysian methods have been used to compute good parameters for AlphaGo and AlphaZero, publication here.

- Scheffer, Tobias: Baysian models (slides)