Trust region methods

First draft: 2022-12-27

New Content!

Proof of relative policy performance identity:

\[\begin{align*} \arg\max_{\pi_{\theta}} J(\pi_{\theta}) &= \arg\max_{\pi_{\theta}} \left[ J(\pi_{\theta}) - J(\pi_{\theta_{\text{old}}}) \right] \; \text{// } J(\pi_{\theta_{\text{old}}}) \text{ is a constant} \\ &= \arg\max_{\pi_{\theta}} \mathbb{E}_{\tau \sim \pi_{\theta}} \left[ \sum_{t} \gamma^{t} A_{\pi_{\theta_{\text{old}}}}(s_t,a_t) \right] \; \text{// Proof: [1]} \\ &\approx \arg\max_{\pi_{\theta}} \mathbb{E}_{s_t, a_t \sim \pi_{\theta_{\text{old}}}} \left[ \frac{\pi_{\theta}(a_t|s_t)}{\pi_{\theta_{\text{old}}}(a_t|s_t)} A_{\pi_{\theta_{\text{old}}}}(s_t,a_t) \right] \; \text{// Importance Sampling (states still sampled from } \pi_{\theta_{\text{old}}}\text{)} \end{align*}\]

[1] Proof of relative policy performance identity: Joshua Achiam (2017), "Advanced Policy Gradient Methods", UC Berkeley, OpenAI. Link: http://rail.eecs.berkeley.edu/deeprlcourse-fa17/f17docs/lecture_13_advanced_pg.pdf (slide 12)
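The sketch below is a quick numerical sanity check of the identity in the simplest possible setting. It uses a one-step bandit with three actions, made-up rewards and made-up policies. In this setting the state distribution cannot change, so the importance sampling step is exact. This is only an illustration under that assumption, not the general proof, and the function names are hypothetical:

```python
def expected_return(pi, rewards):
    # One-step bandit: J(pi) = sum_a pi(a) * r(a)
    return sum(p * r for p, r in zip(pi, rewards))

def advantages(pi_old, rewards):
    # In a one-step bandit Q(a) = r(a) and V = J(pi_old), so A_old(a) = r(a) - J(pi_old)
    v_old = expected_return(pi_old, rewards)
    return [r - v_old for r in rewards]

def importance_sampled_objective(pi_new, pi_old, adv_old):
    # E_{a ~ pi_old}[ pi_new(a) / pi_old(a) * A_old(a) ], computed exactly over all actions
    return sum(po * (pn / po) * a for pn, po, a in zip(pi_new, pi_old, adv_old))

rewards = [1.0, 0.0, 2.0]   # made-up rewards
pi_old = [0.5, 0.3, 0.2]    # old policy
pi_new = [0.2, 0.2, 0.6]    # candidate new policy

lhs = expected_return(pi_new, rewards) - expected_return(pi_old, rewards)
rhs = importance_sampled_objective(pi_new, pi_old, advantages(pi_old, rewards))
print(lhs, rhs)
```

Both sides come out as 0.5, up to floating-point rounding. In a real MDP the new policy also changes which states are visited. For that reason, the importance sampling step is only an approximation in general.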

Introduction

Hi!

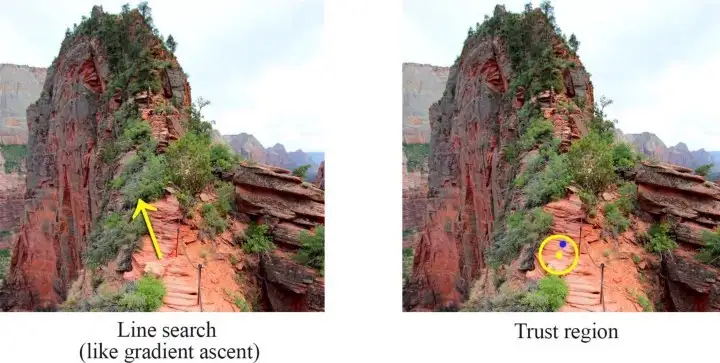

- Trust region methods: TRPO and PPO

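- a bad policy update leads to bad data: the policy collects its own training data, so one step that is too large can derail all following updates

- TRPO needs second-order information (the Hessian of the KL divergence between the old and new policy), which makes it harder to implement -> just use PPO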

- PPO has KL and Clip variants

- KL divergence approximation trick (see kl, some blogpost)

- Clip is easier to implement

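I assume the "KL divergence approximation trick" refers to estimating the KL divergence between the old and new policy from the sampled actions alone. The trick uses the estimator $(r - 1) - \log r$ instead of the naive $-\log r$, with $r = \pi_{\theta}(a|s) / \pi_{\theta_{\text{old}}}(a|s)$ and actions sampled from the old policy. Below is a minimal pure-Python sketch under that assumption. It compares both estimators against the exact KL of two made-up categorical distributions. The names `exact_kl` and `kl_estimates` are hypothetical:

```python
import math
import random

def exact_kl(q, p):
    # KL(q || p) = sum_x q(x) * log(q(x) / p(x)) for discrete distributions
    return sum(qx * math.log(qx / px) for qx, px in zip(q, p))

def kl_estimates(q, p, n=100_000, seed=0):
    # Monte Carlo estimates of KL(q || p) from samples x ~ q, with r = p(x) / q(x)
    rng = random.Random(seed)
    xs = rng.choices(range(len(q)), weights=q, k=n)
    k1 = k3 = 0.0
    for x in xs:
        log_r = math.log(p[x]) - math.log(q[x])
        k1 += -log_r                          # naive: unbiased, but single samples can be negative
        k3 += (math.exp(log_r) - 1) - log_r   # (r - 1) - log r: unbiased and always >= 0
    return k1 / n, k3 / n

# PPO setting: q = pi_old (the policy that collected the data), p = pi_new
pi_old = [0.5, 0.3, 0.2]
pi_new = [0.4, 0.4, 0.2]

kl = exact_kl(pi_old, pi_new)
k1, k3 = kl_estimates(pi_old, pi_new)
print(kl, k1, k3)
```

Both estimates land close to the exact value. When the two policies are close, the $(r - 1) - \log r$ estimate typically has a much smaller variance. To my knowledge, the cleanRL `ppo.py` linked below computes its `approx_kl` diagnostic the same way, from `logratio` and `ratio`.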

Show clip plots
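The numbers behind the clip plots take only a few lines to compute. Below is a pure-Python sketch of the per-sample clipped objective $\min\left(r \hat{A}, \text{clip}(r, 1-\epsilon, 1+\epsilon) \hat{A}\right)$ from the PPO paper. It assumes $\epsilon = 0.2$, and the function names are hypothetical. Instead of plotting, it prints the objective at a few ratios for a positive and a negative advantage:

```python
def clip(x, low, high):
    # Restrict x to the interval [low, high]
    return max(low, min(x, high))

def clipped_objective(ratio, adv, eps=0.2):
    # Per-sample PPO-Clip objective (maximized): min(r * A, clip(r, 1 - eps, 1 + eps) * A)
    return min(ratio * adv, clip(ratio, 1.0 - eps, 1.0 + eps) * adv)

def clipped_loss(ratios, advs, eps=0.2):
    # Negative mean objective over a batch, so that it can be minimized
    return -sum(clipped_objective(r, a, eps) for r, a in zip(ratios, advs)) / len(ratios)

for adv in (1.0, -1.0):
    row = [f"{clipped_objective(r, adv):+.2f}" for r in (0.5, 0.8, 1.0, 1.2, 1.5)]
    print(f"A = {adv:+.0f}:", " ".join(row))
```

For $\hat{A} > 0$ the objective stops increasing once $r > 1+\epsilon$. For $\hat{A} < 0$ it stops increasing once $r < 1-\epsilon$. Either way, a single update gains nothing from moving the policy far away from $\pi_{\theta_{\text{old}}}$.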

- tips for implementing PPO: https://iclr-blog-track.github.io/2022/03/25/ppo-implementation-details/

- code for the post above: https://github.com/vwxyzjn/ppo-implementation-details/blob/main/ppo.py

Derivation of the Surrogate Loss Function

We start with the objective of maximizing the expected advantage. You could also maximize other quantities, like the state value, the state-action value, … (the policy gradient variations are listed in my Actor Critic blogpost). Then we rewrite the formula using importance sampling to get the surrogate objective. It is often called the surrogate loss even though we want to maximize it; in code, its negative is what gets minimized.

\[\begin{align*} J(\theta) = \mathbb{E}_{s,a \sim \pi_{\theta}} \left[ \hat{A}^{\theta_{\text{old}}}(s,a) \right] &\approx \mathbb{E}_{s \sim \pi_{\theta_{\text{old}}}} \left[ \sum_{a} \pi_{\theta}(a|s) \hat{A}^{\theta_{\text{old}}}(s,a) \right] \\ &= \mathbb{E}_{s \sim \pi_{\theta_{\text{old}}}} \left[ \sum_{a} \pi_{\theta_{\text{old}}}(a|s) \frac{\pi_{\theta}(a|s)}{\pi_{\theta_{\text{old}}}(a|s)} \hat{A}^{\theta_{\text{old}}}(s,a) \right] \\ &= \mathbb{E}_{s,a \sim \pi_{\theta_{\text{old}}}} \left[ \frac{\pi_{\theta}(a|s)}{\pi_{\theta_{\text{old}}}(a|s)} \hat{A}^{\theta_{\text{old}}}(s,a) \right] \; (\text{surrogate objective}) \\ \\ \Rightarrow \nabla J(\theta) &= \mathbb{E}_{s,a \sim \pi_{\theta_{\text{old}}}} \left[ \frac{\nabla \pi_{\theta}(a|s)}{\pi_{\theta_{\text{old}}}(a|s)} \hat{A}^{\theta_{\text{old}}}(s,a) \right] \end{align*}\]

The first step is an approximation because the states are still sampled with the old policy.

My derivation from the policy gradient:

\[\begin{align*} \nabla J(\theta) &= \mathbb{E}_{s,a \sim \pi_{\theta}} \left[ \nabla \log \pi_{\theta}(a|s) \hat{A}^{\theta_{\text{old}}}(s,a) \right] \\ &\approx \mathbb{E}_{s,a \sim \pi_{\theta_{\text{old}}}} \left[ \frac{\pi_{\theta}(a|s)}{\pi_{\theta_{\text{old}}}(a|s)} \nabla \log \pi_{\theta}(a|s) \hat{A}^{\theta_{\text{old}}}(s,a) \right] \; \text{// Importance Sampling} \\ &= \mathbb{E}_{s,a \sim \pi_{\theta_{\text{old}}}} \left[ \frac{\nabla \pi_{\theta}(a|s)}{\pi_{\theta_{\text{old}}}(a|s)} \hat{A}^{\theta_{\text{old}}}(s,a) \right] \; \text{// } \nabla \log \pi_{\theta} = \frac{\nabla \pi_{\theta}}{\pi_{\theta}} \end{align*}\]

TODO

- check if the derivations are correct

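The derivations can be checked numerically with a small sketch. It uses a single state, three actions, a softmax policy and made-up advantages; all values and function names are hypothetical. With only one state, the state-distribution approximation disappears. The finite-difference gradient of the surrogate objective, the importance-sampled gradient and the on-policy policy gradient at the current $\theta$ should therefore coincide:

```python
import math

def softmax(logits):
    # Numerically stable softmax: pi(a) = exp(theta_a) / sum_b exp(theta_b)
    m = max(logits)
    exps = [math.exp(x - m) for x in logits]
    z = sum(exps)
    return [e / z for e in exps]

def surrogate(theta, pi_old, adv):
    # E_{a ~ pi_old}[ pi_theta(a) / pi_old(a) * A(a) ], summed exactly over all actions
    pi = softmax(theta)
    return sum(po * (p / po) * a for p, po, a in zip(pi, pi_old, adv))

def finite_diff_grad(f, theta, h=1e-6):
    # Central differences (f(theta + h e_j) - f(theta - h e_j)) / 2h for each component j
    grad = []
    for j in range(len(theta)):
        up, down = list(theta), list(theta)
        up[j] += h
        down[j] -= h
        grad.append((f(up) - f(down)) / (2 * h))
    return grad

def grad_importance_sampled(theta, pi_old, adv):
    # E_{a ~ pi_old}[ grad pi_theta(a) / pi_old(a) * A(a) ],
    # using d pi(a) / d theta_j = pi(a) * (1[a == j] - pi(j)) for a softmax policy
    pi = softmax(theta)
    n = len(theta)
    return [sum(pi_old[a] * pi[a] * (float(a == j) - pi[j]) / pi_old[a] * adv[a]
                for a in range(n)) for j in range(n)]

def grad_on_policy(theta, adv):
    # E_{a ~ pi_theta}[ grad log pi_theta(a) * A(a) ], with d log pi(a) / d theta_j = 1[a == j] - pi(j)
    pi = softmax(theta)
    n = len(theta)
    return [sum(pi[a] * (float(a == j) - pi[j]) * adv[a] for a in range(n)) for j in range(n)]

pi_old = softmax([0.0, 0.5, -0.5])  # policy that collected the data
adv = [1.0, -0.5, 0.2]              # fixed advantage estimates A^{theta_old}(a)
theta = [0.3, 0.2, -0.1]            # current parameters, theta != theta_old

g_fd = finite_diff_grad(lambda t: surrogate(t, pi_old, adv), theta)
g_is = grad_importance_sampled(theta, pi_old, adv)
g_pg = grad_on_policy(theta, adv)
print(g_fd, g_is, g_pg, sep="\n")
```

The three gradients agree up to finite-difference error. With several states, the agreement would only be approximate because of the state distribution.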

wrote Phil Winder and Pieter Abbeel

- blogpost about PPO: https://towardsdatascience.com/proximal-policy-optimization-ppo-explained-abed1952457b

Note that the importance sampling ratio (the first fraction) is often abbreviated as $r(\theta) \doteq \frac{\pi_{\theta} (a|s)}{\pi_{\theta_\text{old}} (a|s)}$ ($r$ stands for ratio).
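In code, the ratio is usually computed from log-probabilities rather than from raw probabilities. The minimal sketch below shows why, assuming the policy network outputs $\log \pi(a|s)$ and using hypothetical function names. For very unlikely actions, such as a product of probabilities over many action dimensions, the raw probabilities underflow to zero, but the difference of the log-probabilities stays finite:

```python
import math

def ratio_direct(p_new, p_old):
    # Naive r(theta) = pi_theta(a|s) / pi_theta_old(a|s) from raw probabilities
    return p_new / p_old

def ratio_from_logprobs(logp_new, logp_old):
    # Same ratio as exp(log pi_theta - log pi_theta_old), finite even if both probabilities underflow
    return math.exp(logp_new - logp_old)

# Moderate probabilities: both ways give (about) 1.5
print(ratio_direct(0.3, 0.2), ratio_from_logprobs(math.log(0.3), math.log(0.2)))

# Very unlikely action: the raw probabilities underflow to 0.0
logp_new, logp_old = -800.0, -801.0
print(math.exp(logp_new), math.exp(logp_old))
print(ratio_from_logprobs(logp_new, logp_old))  # approximately e
```

Calling `ratio_direct(math.exp(logp_new), math.exp(logp_old))` here would raise a `ZeroDivisionError`.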

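Negating the surrogate objective gives a loss function that we minimize:

\[\Rightarrow \mathcal{L}_{\text{actor}}(\theta) = - \mathbb{E}_{s,a \sim \pi_{\theta_{\text{old}}}} \left[ \frac{\pi_{\theta}(a|s)}{\pi_{\theta_{\text{old}}}(a|s)} \hat{A}^{\theta_{\text{old}}}(s,a) \right]\]

Its gradient is the negative of $\nabla J(\theta)$ above, so minimizing $\mathcal{L}_{\text{actor}}$ follows the policy gradient.

PPO in the actor-critic style uses either a shared network for the policy and the value function, or two separate networks.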
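Below is a minimal sketch of the actor loss on a batch of sampled actions. It also shows one way to combine it with a value loss when the policy and value function share one network. The assumptions are as follows: log-probabilities are given as plain floats, and the value loss is a mean squared error. `value_coef` is a placeholder weight, and the entropy bonus that PPO implementations often add is left out. All function names are hypothetical:

```python
import math

def actor_loss(logp_new, logp_old, adv):
    # -mean_i[ r_i * A_i ] with r_i = exp(log pi_theta(a_i|s_i) - log pi_theta_old(a_i|s_i))
    n = len(adv)
    return -sum(math.exp(ln - lo) * a for ln, lo, a in zip(logp_new, logp_old, adv)) / n

def value_loss(values, returns):
    # Mean squared error between the critic's predictions and its return targets
    return sum((v - g) ** 2 for v, g in zip(values, returns)) / len(values)

def shared_network_loss(logp_new, logp_old, adv, values, returns, value_coef=0.5):
    # One network for policy and value function -> one scalar loss for one optimizer.
    # value_coef = 0.5 is a placeholder weighting (assumption); entropy bonus omitted.
    return actor_loss(logp_new, logp_old, adv) + value_coef * value_loss(values, returns)

# Right after data collection theta == theta_old, so every ratio is exactly 1
logp = [math.log(0.5), math.log(0.25), math.log(0.8)]
adv = [1.0, -2.0, 4.0]
values = [1.0, 0.0, 2.0]
returns = [1.0, 1.0, 1.0]

print(actor_loss(logp, logp, adv))  # -> -1.0 (= -mean(adv))
print(shared_network_loss(logp, logp, adv, values, returns))
```

With two separate networks, the actor would minimize `actor_loss` and the critic would minimize `value_loss`, each with its own optimizer.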

TRPO

PPO

TODO

- first look at importance sampling

- then watch Pieter's lecture and take notes

- then research anything that’s unclear

- implement it

References

- Thumbnail taken from here.

- Pieter Abbeel: L4 TRPO and PPO (Foundations of Deep RL Series)